UCLA engineers have developed a noninvasive brain-computer interface (BCI) system that uses artificial intelligence to interpret user intent, with the goal of making assistive technologies faster, more intuitive, and more accessible for people with movement disorders. The AI acts as a kind of co-pilot, combining brain signals with visual context to help users complete tasks such as moving a robotic arm or controlling a computer cursor.

Traditional brain-computer interfaces rely solely on decoding electrical activity from the brain, often recorded with electroencephalography (EEG). This approach works, but it can be slow and error-prone, especially for complex tasks. The UCLA team added a second layer: a camera-based AI that observes the environment and predicts what the user is trying to do. By fusing these two data streams, the system can anticipate intent and assist accordingly.
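To make the idea of fusing the two data streams concrete, here is a minimal sketch in Python. It is not the UCLA team's method, which the article does not describe in detail. It assumes a hypothetical setup in which the EEG decoder outputs a noisy 2D velocity and the camera supplies a list of candidate target positions. The function name `infer_target` and the `sharpness` parameter are illustrative only.

```python
import math


def infer_target(position, decoded_velocity, targets, sharpness=4.0):
    """Return one probability per candidate target (hypothetical helper).

    Each target is scored by how well the decoded movement direction
    points toward it (cosine similarity), then the scores are turned
    into probabilities with a softmax.
    """
    vx, vy = decoded_velocity
    speed = math.hypot(vx, vy)
    scores = []
    for tx, ty in targets:
        dx, dy = tx - position[0], ty - position[1]
        dist = math.hypot(dx, dy)
        if speed == 0.0 or dist == 0.0:
            cosine = 0.0  # no directional evidence for this target
        else:
            cosine = (vx * dx + vy * dy) / (speed * dist)
        scores.append(sharpness * cosine)

    # Numerically stable softmax over the scores.
    peak = max(scores)
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]


# Assumed example: three objects seen by the camera, and a decoded
# velocity that points roughly toward the first one.
probabilities = infer_target(
    position=(0.0, 0.0),
    decoded_velocity=(1.0, 0.2),
    targets=[(5.0, 0.0), (0.0, 5.0), (-5.0, 0.0)],
)
```

In this sketch the camera narrows the question from "where is the cursor going?" to "which of these few objects does the user want?", which is an easier question to answer from a noisy signal.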

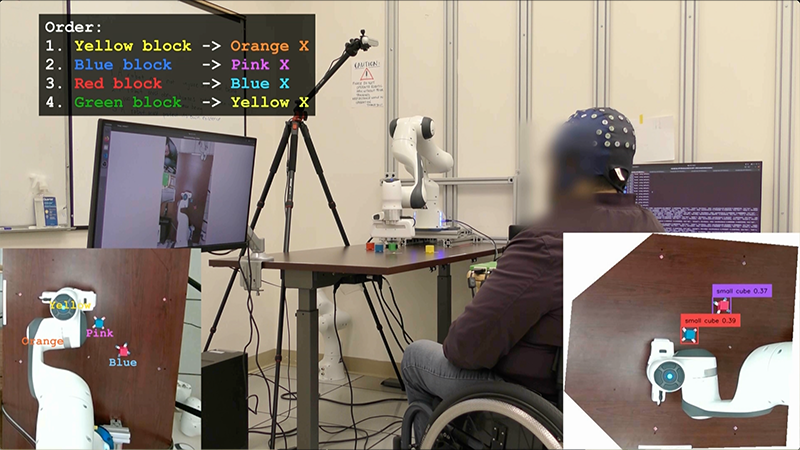

In trials, participants, including one with paralysis, performed tasks significantly faster with the AI co-pilot. For example, the participant with paralysis completed a block-moving task with a robotic arm in under seven minutes using the AI-enhanced system but could not complete it at all without the AI’s help. This shared-autonomy model lets the system fill in gaps when brain signals are ambiguous, making the interface more reliable and user-friendly.
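A common way to express shared autonomy is to blend the user's command with an assistive command, weighting the assistance by how confident the system is about the goal. The Python sketch below illustrates that general idea only. The blending rule, the function name `blend_command`, and the parameters `max_assist` and `assist_speed` are assumptions for illustration, not details taken from the UCLA study.

```python
import math


def blend_command(user_velocity, position, target, confidence,
                  max_assist=0.8, assist_speed=1.0):
    """Blend a decoded user velocity with a pull toward the likely target.

    `confidence` (0 to 1) is how sure the copilot is about the goal.
    `max_assist` caps the copilot's share so the user always keeps
    some control. Both are hypothetical tuning parameters.
    """
    alpha = max_assist * min(max(confidence, 0.0), 1.0)

    # Assistive command: move straight toward the target at a fixed speed.
    dx, dy = target[0] - position[0], target[1] - position[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        assist = (0.0, 0.0)  # already at the target
    else:
        assist = (assist_speed * dx / dist, assist_speed * dy / dist)

    # Linear blend of the user's command and the assistive command.
    return tuple((1.0 - alpha) * u + alpha * a
                 for u, a in zip(user_velocity, assist))


# With zero confidence the user's command passes through unchanged;
# with full confidence the copilot contributes up to `max_assist`.
unassisted = blend_command((0.3, -0.1), (0.0, 0.0), (2.0, 0.0), confidence=0.0)
assisted = blend_command((0.0, 1.0), (0.0, 0.0), (2.0, 0.0), confidence=1.0)
```

Capping the assistance below 100 percent is one possible design choice: it keeps the user's brain signal in the loop even when the copilot is confident, so a wrong guess about the goal can still be overridden.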

The technology is entirely noninvasive, meaning it does not require brain implants or surgery. This makes it more accessible to a wider range of users, including those who may not qualify for or want invasive procedures. It also opens the door to broader applications in rehabilitation, prosthetics, and everyday assistive devices.

The research team, led by Jonathan Kao, an associate professor of electrical and computer engineering at UCLA, sees this as a step toward more natural human-machine interaction. By combining neural data with environmental cues, the system mimics how people make decisions using both internal intent and external context. It is a more holistic approach to brain-computer communication.


Article from UCLA: AI Co-Pilot Boosts Noninvasive Brain-Computer Interface by Interpreting User Intent

Abstract in Nature Machine Intelligence: Brain–computer interface control with artificial intelligence copilots