For over a century, surgical microscopes have relied on a simple trick: mimic human binocular vision to create depth perception. But while this stereoscopic approach helps surgeons navigate delicate anatomy, it falls short when it comes to precision measurement, automation, or real-time 3D reconstruction. Enter the FiLM-Scope—a new surgical microscope developed by researchers at Duke University that uses 48 tiny cameras to generate detailed 3D maps of the surgical field, live and in color.

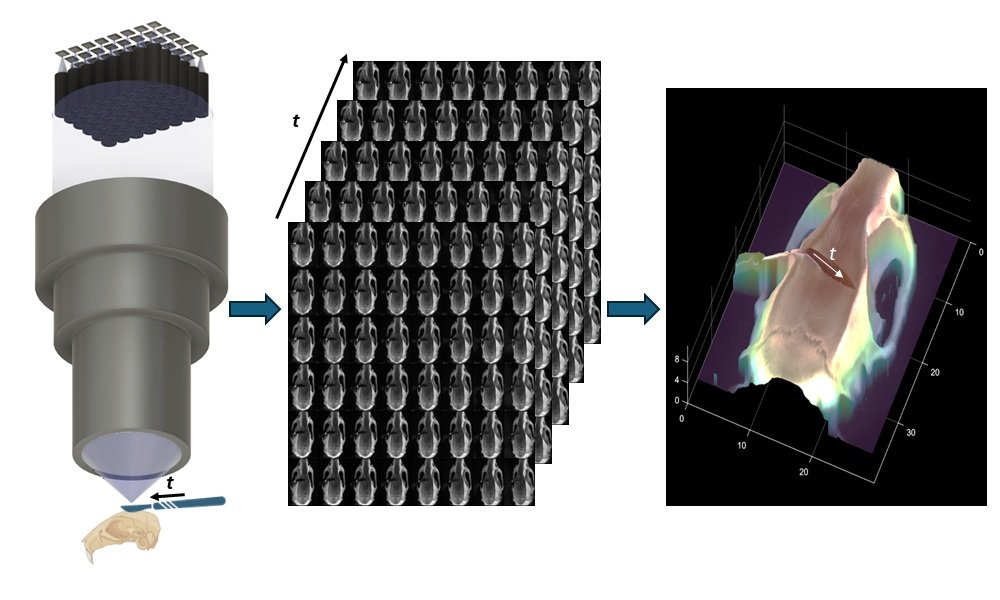

FiLM-Scope, short for Fourier lightfield multiview stereoscope, arranges 48 microcameras in a grid behind a single high-throughput lens. Each camera captures the scene from a slightly different angle, producing a stack of 12.5-megapixel images, one per camera, that a self-supervised algorithm combines into a 3D reconstruction. The result: a dense, real-time 3D map with 11-micron depth precision across a 28 × 37 mm field of view. And because the system captures all perspectives simultaneously, surgeons can digitally pan, zoom, or refocus without physically moving the microscope.
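To put those figures in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (48 cameras, 12.5 megapixels each, a 28 × 37 mm field of view). The 1-byte-per-pixel raw size is purely an illustrative assumption, not a published specification, and the function names are made up for this example.

```python
# Figures quoted in the article.
NUM_CAMERAS = 48
MEGAPIXELS_PER_CAMERA = 12.5
FOV_MM = (28.0, 37.0)  # field of view, in millimeters

# ASSUMPTION (not from the article): 1 byte per pixel, i.e. 8-bit raw data.
ASSUMED_BYTES_PER_PIXEL = 1


def snapshot_megapixels(num_cameras, megapixels_per_camera):
    """Total pixels, in megapixels, captured when all cameras fire at once."""
    return num_cameras * megapixels_per_camera


def fov_area_mm2(fov_mm):
    """Area of the rectangular field of view, in square millimeters."""
    return fov_mm[0] * fov_mm[1]


total_mp = snapshot_megapixels(NUM_CAMERAS, MEGAPIXELS_PER_CAMERA)
area_mm2 = fov_area_mm2(FOV_MM)

# Raw size of one multi-camera snapshot under the assumed bytes per pixel.
snapshot_megabytes = total_mp * ASSUMED_BYTES_PER_PIXEL

print(f"Pixels per snapshot: {total_mp:.0f} MP")
print(f"Field of view area:  {area_mm2:.0f} mm^2")
print(f"Raw snapshot size:   {snapshot_megabytes:.0f} MB (assumed 8-bit pixels)")
```

Under these assumptions, a single simultaneous capture amounts to about 600 megapixels spread over roughly 1,036 square millimeters, which hints at why an automated algorithm, rather than a human viewer, has to turn the raw views into a depth map.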

This advance in intraoperative imaging could benefit both manual and robotic microsurgery. Unlike pre-op CT or MRI scans, FiLM-Scope updates in real time, adapting to tissue shifts and tool movements. It also covers a wider area than optical coherence tomography (OCT) and is easier to interpret, offering full-color, high-resolution views without sacrificing speed.

Beyond the OR, the technology could find applications in microfabrication, materials science, and any field that demands high-fidelity 3D visualization. But its most immediate impact may be in giving surgeons—and surgical robots—a clearer, more measurable view of the body’s most intricate terrain. It’s not just a microscope. It’s a live-action depth map, built from light and angles.

Article from SPIE: New surgical microscope offers precise 3D imaging using 48 tiny cameras

Abstract in Advanced Photonics Nexus: Fourier lightfield multiview stereoscope for large field-of-view 3D imaging in microsurgical settings